Research

Projects in submission have had their titles changed to comply with the double-blind review process.

Names marked with an asterisk (*) denote equal contribution.

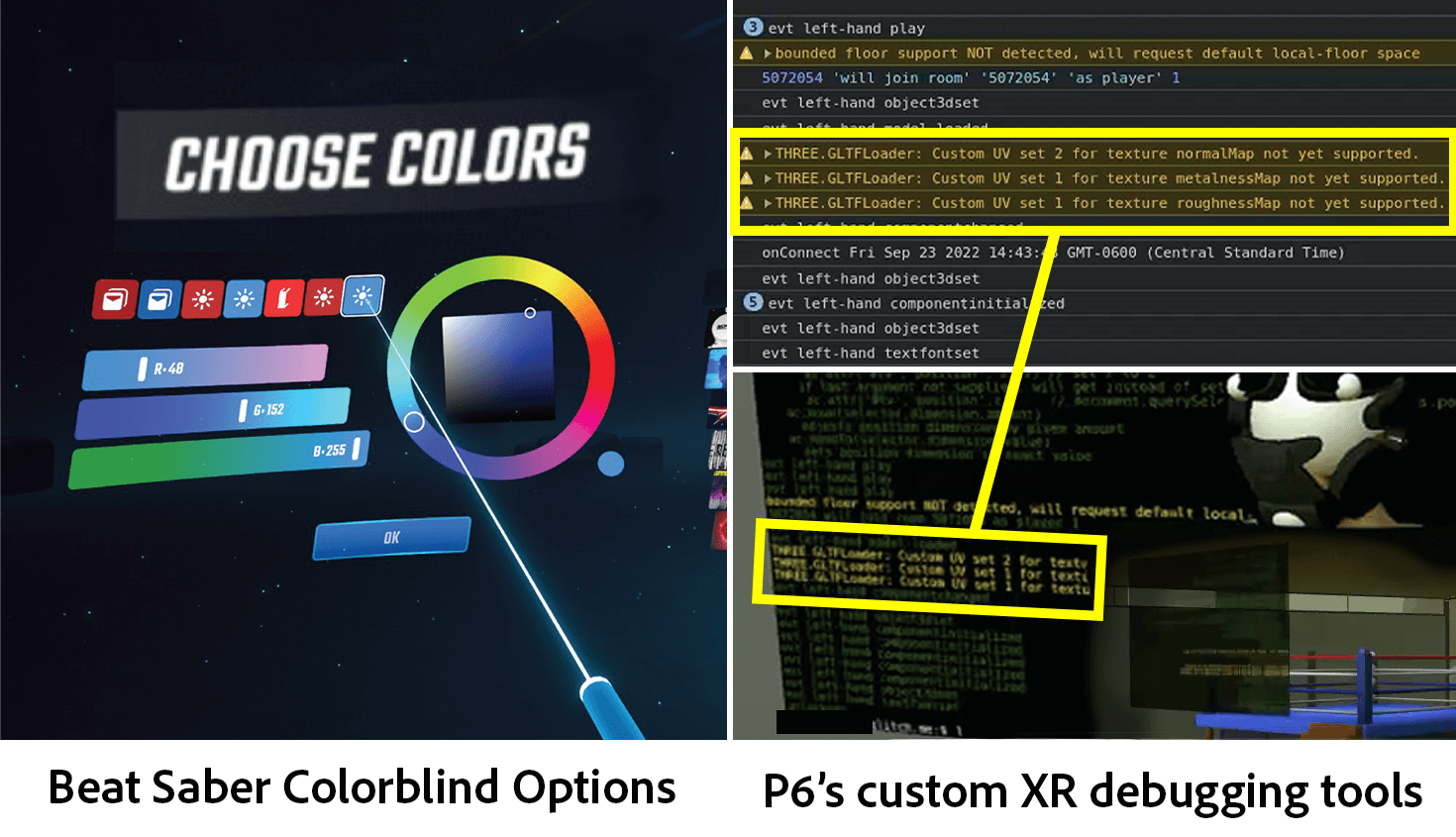

How Well Can 3D Accessibility Guidelines Support XR Development? An Interview Study with XR Practitioners in Industry

Daniel Killough, Tiger F. Ji, Kexin Zhang, Yaxin Hu, Yu Huang, Ruofei Du, Yuhang Zhao

Evaluating existing 3D accessibility guidelines with XR practitioners across different levels of industry, and examining why the guidelines don't fully support XR development. See also 'XR for All', an extended version of this work that includes additional perspectives on a11y development as a whole.

VRSight: AI-Driven Real-Time Scene Descriptions to Improve Virtual Reality Accessibility for Blind People

Daniel Killough, Justin Feng*, Zheng Xue "ZX" Ching*, Daniel Wang*, Rithvik Dyava*, Yapeng Tian, Yuhang Zhao

Using state-of-the-art object detection, zero-shot depth estimation, and multimodal large language models to identify virtual objects in social VR applications for blind and low vision people. *Authors 2-5 contributed equally to this work.

XR for All: Understanding Developer Perspectives on Accessibility Integration in Extended Reality

Daniel Killough, Tiger F. Ji, Kexin Zhang, Yaxin Hu, Yu Huang, Ruofei Du, Yuhang Zhao

'Deluxe/Extended Edition' of our CHI 2026 Guidelines paper, analyzing the challenges developers face when integrating a11y features into their XR apps. Includes additional perspectives from practitioners alongside the guideline evaluation, covering why they do or do not develop a11y features for people with visual, cognitive, motor, and speech & hearing impairments.

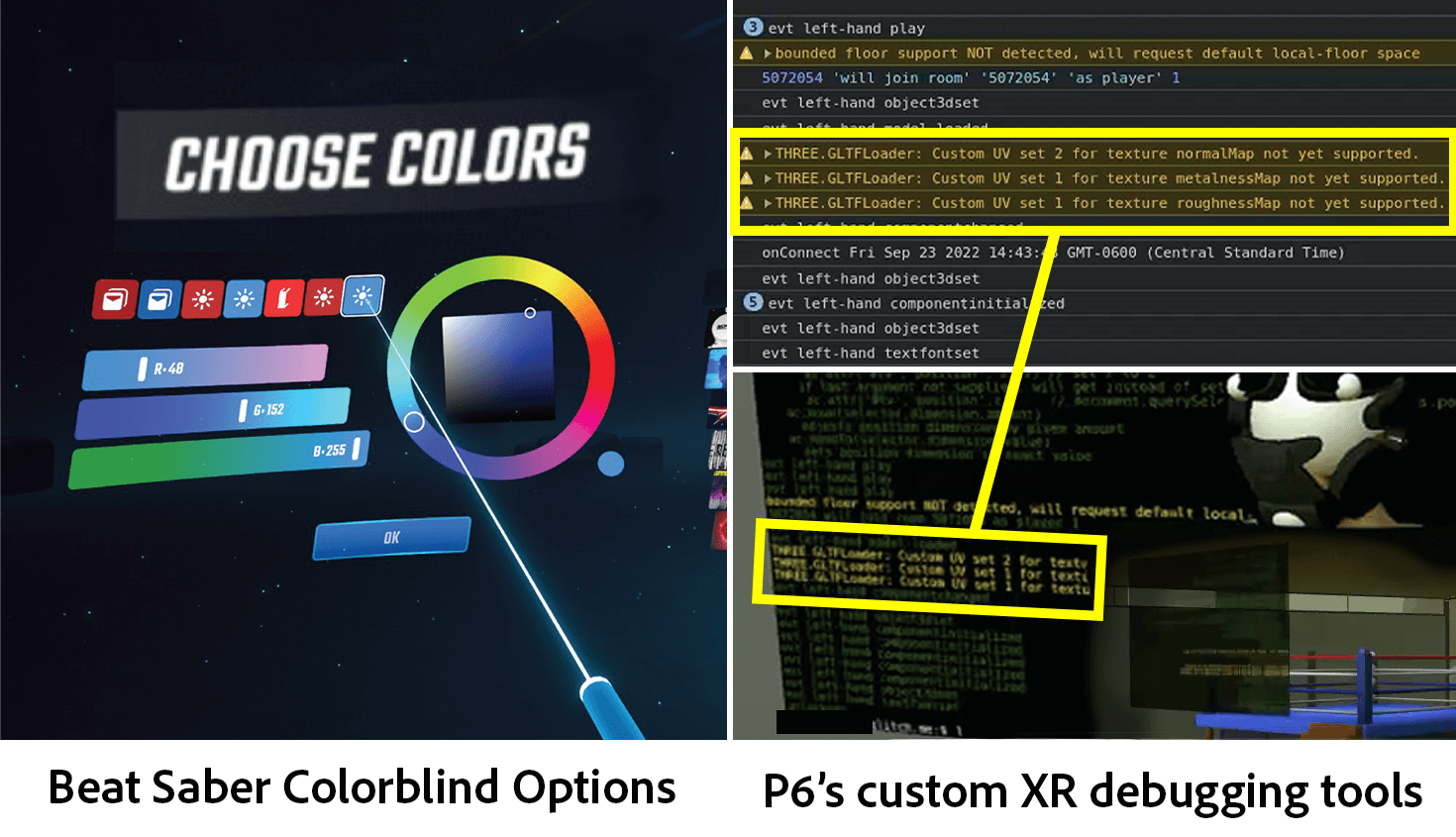

AROMA: Mixed-Initiative AI Assistance for Non-Visual Cooking by Grounding Multi-modal Information Between Reality and Videos

Zheng Ning, Leyang Li, Daniel Killough, JooYoung Seo, Patrick Carrington, Yapeng Tian, Yuhang Zhao, Franklin Mingzhe Li, Toby Jia-Jun Li

AI-powered cooking assistant using wearable cameras to help blind and low-vision users by integrating non-visual cues (touch, smell) with video recipe content. The system proactively offers alerts and guidance, helping users understand their cooking state by aligning their physical environment with recipe instructions.

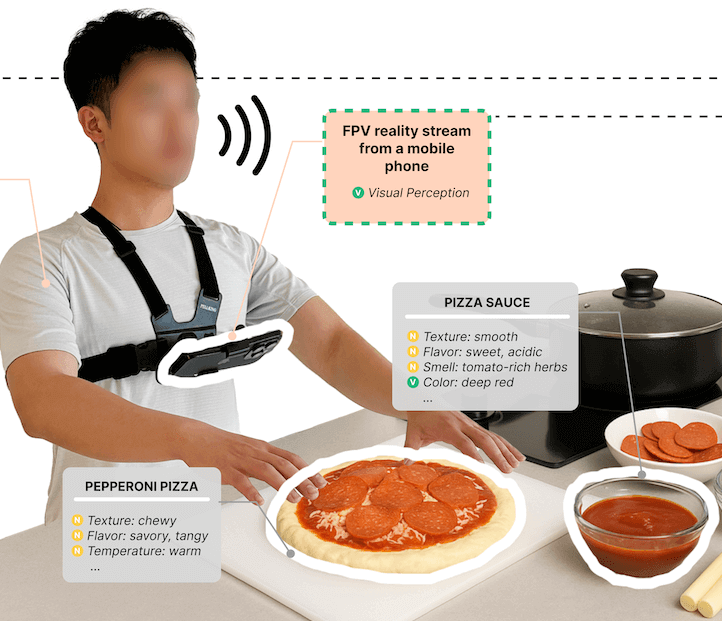

Understanding Mixed Reality Drift Tolerance

Daniel Killough*, Ruijia Chen*, Yuhang Zhao, Bilge Mutlu

Evaluating how object drift in mixed reality affects users' task performance and their perception of task difficulty.

GazePrompt: Enhancing Low Vision People's Reading Experience with Gaze-Aware Augmentations

Ru Wang, Zach Potter, Yun Ho, Daniel Killough, Linda Zeng, Sanbrita Mondal, Yuhang Zhao

System using eye tracking to augment passages of text, addressing low vision people's reading challenges (e.g., line switching and difficult word recognition).

Exploring Community-Driven Descriptions for Making Livestreams Accessible

Daniel Killough, Amy Pavel

Making live video more accessible to blind users by crowdsourcing audio descriptions for real-time playback. Descriptions were crowdsourced from 18 sighted community experts and evaluated with 9 blind participants.

SolAR Run: Using Augmented Reality to Promote Global Skin Cancer Prevention Efforts

Arman Farsad*, Daniel Killough*, Sahar Ali, Neha Momin, Sajani Patel, Thushani Herath, Lucy Atkinson, Erin Reilly

Two-part system leveraging advertising, game theory, and augmented reality to encourage young adults in Singapore and Texas to protect themselves against skin cancer. Image shows realistic cosmetic skin cancer effects on users' faces over time, as informed by collaborators in public health.

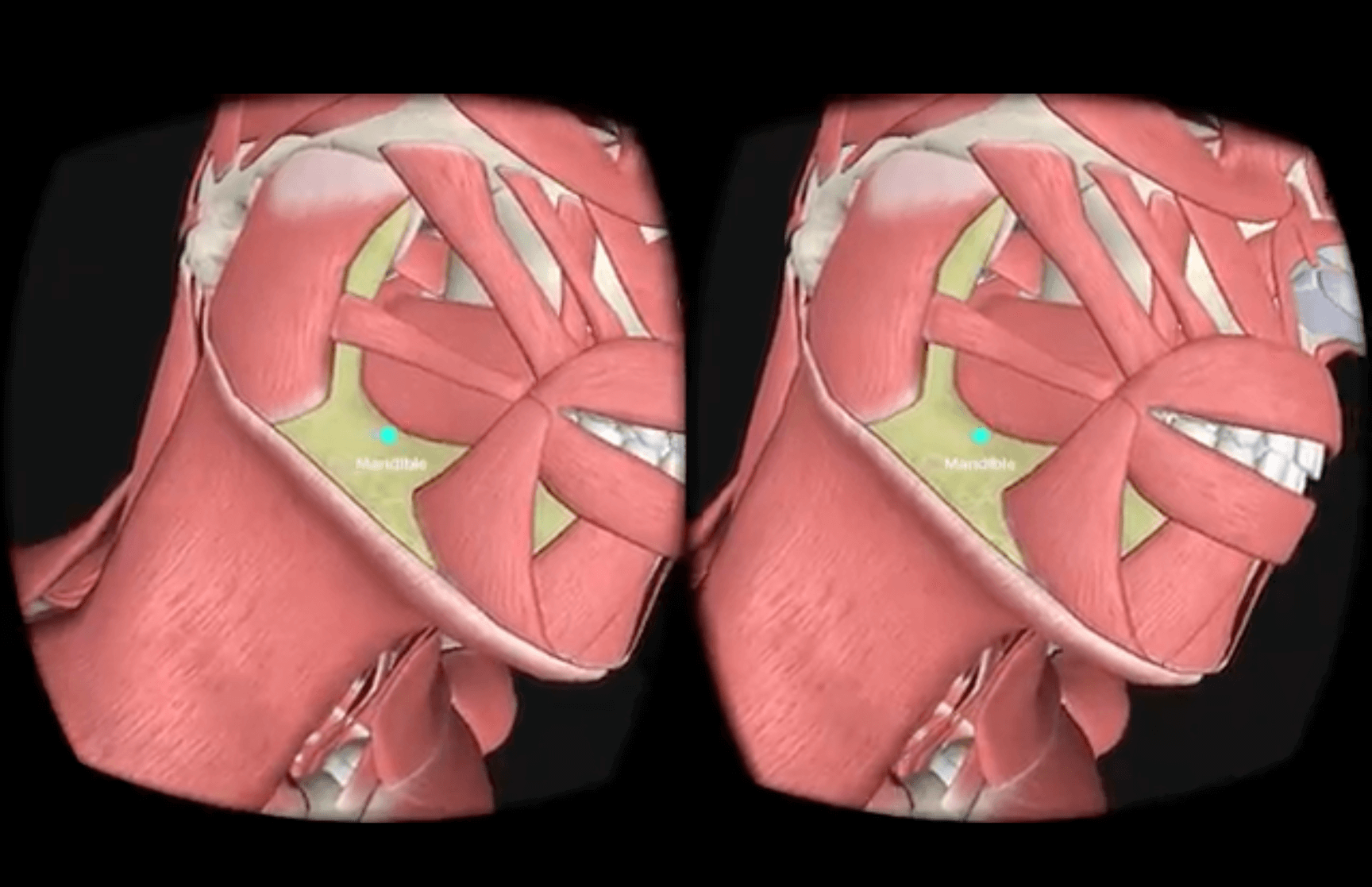

The Ability of Virtual Reality Technologies to Improve Comprehension of Speech Therapy Device Training

Daniel Killough

Senior thesis investigating the feasibility of virtual reality technologies for use in oral placement therapy training (speech therapy largely for kids with Down syndrome). Developed and evaluated a system to convert existing 2D recordings to stereoscopic 3D for use with mobile VR. Recorded additional visuals to "bring" therapists into the presenter's context.